Important Update

The post below was based on conversations that occurred in the community, and on information that was, at best, incomplete. It’s important to me that I’m part of a solution to the miscommunication problems that got us to this point.

James Petty, who is the CEO of the DevOps Collective, just issued a post on this subject that clears up a lot of the misconceptions and miscommunications. It’s pretty short, so I’ll reproduce it here to make sure you see it before looking at the rest of this post.

The decision was made to partner with Pluralsight to do our video recordings in 2020. Pluralsight has offered to save us $40K-$50K (per event) by having their team do the recordings of the session. There was never any condition on their part that the recordings be hosted exclusively behind their paywall, this decision was made by the DevOps Collective. In fact, other events that they work with have their session recordings available to the public for free. After better understanding everyone’s perspectives on the subject, we’ve indicated to Pluralsight that we would like to use a different model instead, and they’re very happy to oblige us. They’ve made it clear that they’re happy to work with an event on whatever terms that event needs for its community, and we appreciate their flexibility and willingness to support us.

Give us a few days to get everything worked out and will make an announcement soon on the 2020 session recordings.

I appreciate the tough spot that James and his crew were put into here. This unraveled really quickly, and there are no easy solutions to the problems the Summit organizers are working to solve.

The vast majority of the uproar in the PowerShell community over the last couple of days has been based off the incorrect understanding that “Pluralsight will own the recordings and will decide what happens to them - probably going to put them behind a pay wall”. As James’ post outlines, this isn’t the case. It also appears that nothing is nearly as written in stone as many folks in the community previously thought it was. The right move at this point seems to be to chill out and trust that the good people behind all of the organizations involved will do the right thing. I’m all for holding people and organizations accountable, but there’s obviously a lot more about this situation that needs to be settled and communicated with the public. For my part in perpetuating the misinformation surrounding this subject, I apologize. Hopefully readers understand my original post below was based off of the information I had at the time, and appreciate my effort to correct it here.

The entire original post is below, with only a couple of formatting changes. The content itself is unchanged, since I don’t think there’s any point erasing it. Besides, my whole blog is on GitHub Pages so it’s all on the internet forever and ever anyway.

Here I go with my second post of 2019. I’ve certainly wanted to be more prolific, but it’s hard to blog about the work I perform daily for reasons I won’t get into. I’m really hopeful that I’ll return to an “every other week” cadence, but… we’ll see. Anyway, there’s been some news that I’m feeling compelled to provide my thoughts on.

In case you missed it, the folks who put on the PowerShell + DevOps Global Summit have just announced some changes to the recordings for the 2020 event. Simply put, in previous years, the DevOps Collective (the folks who organize and put on the Summit) has paid to record the sessions presented at the event with funds from sponsors and ticket sales. Those recordings have been uploaded to their YouTube channel and are therefore shared for free with the community forever. In 2020, however, Pluralsight has purchased the rights to the recordings of these sessions and appears intent on hosting them behind a pay wall. Only people who attended the Summit and people with a Pluralsight subscription will be able to watch the recordings.

Full disclosure: I have some kind of a relationship with both Pluralsight and the DevOps Collective. I have authored two courses on Azure Automation for Pluralsight which I was paid for and continue to receive royalties on. You should watch them if you want to learn about Azure Automation. I am really proud of them. Occasionally, I buy and sell tiny amounts of Pluralsight stock. I’ve also presented at the previous two PowerShell Summits, and am looking forward to presenting again in 2020. I’ve received a stipend from the Summit for the session I presented in 2018, but since joining Microsoft, such compensation has ceased.

These changes to how the recordings from Summit are being handled have sparked a lot of discussion, and now I shall try to assemble my thoughts in one post. One thing that should be made absolutely clear is that although people are very passionate about this matter, and the community reaction has been largely negative, neither I nor anybody I’ve spoken with about this holds any anger towards the people behind these decisions on either side. I am lucky to know many of them personally and have nothing but great things to say about them. That said, there’s a lot to unpack here.

The Good

The good news here is there’s more money involved and that enables a lot of good things.

Good for Pluralsight

The obvious win here for Pluralsight is that they get exclusive access to incredible content shared by some of the most brilliant minds in this field. The content shared by Summit speakers is regularly the best new technical video content shared every year. Events like Summit, PSConfEU, PSConfAsia, and a small handful of user groups, are the only places you can depend on seeing these kinds of in-depth, intensely technical sessions. Putting this content behind a pay wall is clearly a way to generate revenue for Pluralsight. If you sign up for a trial to watch Summit recordings, maybe you’ll stick around and watch some other courses, and convert to being a paying customer.

Good for DevOps Collective

Warren’s post linked above indicates that the DevOps Collective will save about $100,000 USD between their two 2020 events. That’s a ton of money for this non-profit. Warren does a great job of outlining the benefits for Summit in his post, so I won’t reiterate them here, but I completely trust that the DevOps Collective is doing their absolute best to use these funds to put on the best event possible.

Professional recording of conference sessions is expensive. Unless you’ve tried to organize recordings for your own conference, it definitely costs more than however much you think it costs. I don’t know exactly why companies are able to charge so much money to record conference sessions, but they do. This isn’t even just a Bellevue thing. It’s expensive to record conferences everywhere in the world.

Good for Speakers

This is the group I identify with. The best part of this decision for speakers is that there are going to be more of us in 2020. The compensation for speaking at Summit is a free ticket to Summit. The more free speaker tickets the DevOps Collective gives away, the more other attendees and sponsors have to pay to help cover those costs. More speakers (hopefully) means a more diverse group on stage, and therefore a broader exposure of thoughts and ideas for everyone.

More speakers also means that each speaker may present fewer sessions. Personally, I love the idea of presenting as many times as the organizers will let me, but that’s obviously self-indulgent, and also probably not great for the aforementioned diversity of ideas and thoughts. Speaking at Summit is a Big Deal™, especially for folks who are less established in the community, and if speaker stress can be reduced by limiting the number of multi-session presenters, then that’s a good thing which obviously costs money.

Having recorded examples of technical sessions is a big deal for speakers. It matters for a speaker’s personal brand and their status in the community as a trusted source of information, and it is a key element in a speaker’s work to get more sessions accepted at more events. The YouTube uploads of the Summit session recordings usually get a few hundred to a few thousand views. The DevOps Collective doesn’t have a ton of resources to spend marketing them, and so it falls on the speakers to spread their session recordings around. It stands to reason that a for-profit company with a more obvious financial interest in making sure these recordings get watched would put more effort and resources into marketing. Although the recordings would be behind a pay wall, it’s possible (but not proven) that more people would actually see them.

Good for Community

By “community” I mean people who may or may not be attending Summit. If you’re attending Summit, you’ll get access to the recordings. This is huge because Summit runs multiple sessions at the same time and it’s often impossible to choose between sessions that run against each other. I’ve personally had the experience of building my schedule, having a hard time choosing a session for a specific time slot, and then realizing I should probably attend the session that I was going to be presenting. Pluralsight has a substantial financial interest in making sure that session recordings are available for paying customers and Summit attendees. That assures Summit attendees that some of the “do I go here or there” challenges aren’t such a big deal, because the recordings will be available afterward.

If you’re not attending Summit, there’s no good here for you at all. Speaking of which, now for…

The Bad

No matter which way you look at this, putting the Summit session recordings behind a pay wall has some drawbacks that are impossible to ignore.

Bad for Pluralsight

I’ll be blunt, not out of anger, but out of clarity: Pluralsight looks greedy here.

But, why shouldn’t they be? Pluralsight is a publicly traded company with a fiduciary responsibility to its shareholders to make decisions that are profitable. A somewhat modest, transparent display of greed isn’t really something that I personally fault Pluralsight for. They saw a business opportunity and seized it, negotiating terms that were favorable for Pluralsight. That’s exactly what they’re supposed to do.

Pluralsight will own the rights to the recordings of Summit sessions, and has made it clear that Pluralsight subscribers and Summit attendees will be able to watch them. Right now, it appears that these are the only groups of people for whom Summit session recordings will be made available, although nobody has actually come out and said publicly that the general community at large won’t be able to watch them. Pluralsight has some content available to watch for free, and there’s an opportunity here for Pluralsight to add the Summit session recordings to that library. One compromise that I’m personally a fan of is for Pluralsight to host the Summit sessions for its members and Summit attendees only for a few months, and then make them public.

Bad for DevOps Collective

Being blunt again not out of anger, but out of clarity: The DevOps Collective looks like they sold out.

All of the wonderful things that the DevOps Collective plans to do with this money aside, they’re a community-oriented non-profit who just silently sold an enormously valuable community resource to a for-profit company. This is a bad look. As a non-profit whose existential purpose is to contribute, and help others to contribute to the PowerShell and DevOps community, this is objectively a huge step in another direction.

Everybody who’s dug into this issue totally understands that recording conference sessions is expensive and complicated. Everybody deeply appreciates the financial and human resources that the DevOps Collective puts into making these recordings available to the community. Everybody also wants the DevOps Collective to continue making that investment and make sure these sessions are donated to the community. This is a “stuck between a rock and a hard place” scenario for the DevOps Collective, but right now the general public opinion appears to be that they chose wrong.

Bad for Speakers

Many speakers feel blindsided by this. It appears that details of this arrangement were still being finalized while the CFP was going on, and so the first that speakers heard of the recordings being put behind a pay wall was when they found out their session proposal was accepted and they read the speaker agreement. Some speakers I’ve spoken to have already decided they are going to retract their proposals, and many more are considering the same. I’m not among those who are considering pulling out of Summit, but I can see where they’re coming from.

Speakers Are Paying To Create Content For A For-Profit Company

My biggest issue with this whole thing is that speakers are being taken advantage of. When you agree to speak at Summit, you agree to cover your own travel and expenses. You are compensated with a free ticket to Summit, and that’s it. Possibly there will be a modest stipend, but that’s not part of the agreement. This adds up to a scenario where a speaker (or their employer) is paying thousands of dollars in travel, lodging, meals, time away from the office, etc. in exchange for the opportunity to speak at Summit, engage with other attendees, and to enjoy all the other sessions.

In this new situation, however, now a speaker or their employer is paying thousands of dollars for all of that, but a significant product of that investment is now a source of revenue for a for-profit company instead of a donation made to the technical community. Speakers are effectively paying to create content for a for-profit company. I can’t express clearly or emphatically enough how much I dislike this.

Since I work at Microsoft, it costs me nothing to speak at Summit. I live in the area, and my employer gives me the time to attend the event. I suppose the only expense I take on is the free time that goes towards making my sessions as good as I can. In the past I’ve taken vacation time and paid all my own expenses in order to speak at Summit. I did that with the understanding that my speaking at Summit was good for me (exposure, personal brand), good for the conference (unique content), and good for the entire community (watch my content). Nobody profited off my expenditures.

Bad for Community

Obviously, the general public got to view these recordings for free and now they have to pay. Pretty cut and dried here. I feel like I’ve sold the quality of the technical content shared at Summit already, and won’t rehash it here.

The Bottom Line

There is good and bad for everybody here. More money being involved means that the event itself benefits, and so do the people directly connected to the event, including the speakers and attendees. The people who are victims of this situation are the members of the general community who aren’t attending Summit, and the speakers. That’s right, speakers both win and lose here.

It’s a complicated scenario with a lot of different perspectives to consider. I don’t envy anybody involved in these decisions. While I think the DevOps Collective made a mistake, there is a chance for Pluralsight to “do the right thing” and make the recordings public on their site (perhaps after a period of time), and “donate” them to the technical community.

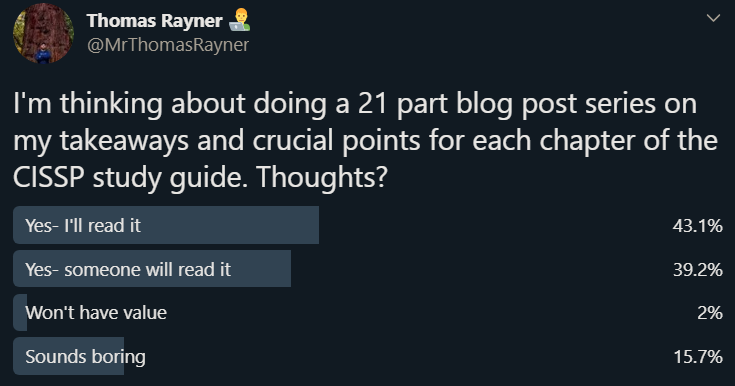

If you have thoughts on this new agreement that Pluralsight and the DevOps Collective have entered into regarding the 2020 recordings of the Summit sessions, especially if you like the idea of making the recordings public after a period of time of paid access, tweet at @PSHSummit, @PSHOrg, @Pluralsight, and use the hashtags #PSHDevOps and #PSHSummit. Tag me too, @MrThomasRayner. Please also join us in the Conferences channel on the unified/bridged PowerShell community Discord and Slack.

